The rise of ungoverned AI use is introducing traceability gaps across regulated processes, signaling the next major challenge for compliance and audit readiness.

Organizations have always feared what they can’t see. For decades, compliance teams built entire systems around visibility: documented processes, controlled procedures, structured approvals, traceable decisions. The goal was simple. If something ever went wrong, the organization could follow a clean trail back to the truth.

But over the last two years, something subtle has changed inside organizations, something that now threatens the very idea of traceability. It’s quiet, fast-moving, and already embedded in everyday work. Employees are using AI in ways leaders never intended, and often never notice. What’s emerging is not a technology problem, but a new form of compliance risk hiding in plain sight.

This phenomenon has a name: AI Shadow Compliance. And it may define the next decade of regulatory oversight.

A New Kind of Invisible Risk

To understand why this matters, it helps to look at how quickly AI has become woven into daily routines. Employees are not waiting for corporate policy or IT approval. They are turning to generative AI the way a previous generation turned to calculators, spell-check, and Google Search. When the pressure is on, when deadlines loom, when a procedure feels too dense or a CAPA narrative feels too technical, AI becomes a quiet companion. A fixer. A translator. A shortcut.

No one announces they’re using it. They just do.

It starts harmlessly: “Rewrite this in clearer language.”

Then: “Summarize this deviation report.”

Then: “Draft an initial root cause analysis based on these facts.”

Then, without noticing, the employee is pasting the AI-generated output directly into a controlled record.

And the system, your system, cannot see any of it.

This is the heart of AI Shadow Compliance.

Not that AI exists, but that it exists unseen, influencing regulated work without governance, oversight, or traceable logic. Compliance leaders assume their documentation is accurate because the workflow was followed. But the workflow has become porous. AI slips in between steps, rewriting the narrative, refining the explanation, adjusting the logic.

The document may look better. It may read more clearly. But its origin is no longer purely human, nor is it auditable. And that matters.

Why Shadow AI Emerges So Easily

Employees are not acting recklessly. Most believe they’re helping. They see AI as a productivity tool—a modern assistant that saves time and improves clarity. And, in truth, it does. The problem is not the motivation; it’s the invisibility.

AI becomes a shadow actor because of three forces working together.

First, there is simply too much work for too few people. Compliance teams are stretched. Quality teams are understaffed. Process owners are juggling more than ever. When AI offers speed, they take it.

Second, AI feels harmless. It doesn’t demand login credentials. It doesn’t challenge authority. It’s just a box you type into. People assume that if they’re not sharing confidential information, they’re safe.

Third, organizations have not yet built governance structures for generative output. They address data privacy risks but forget the equally dangerous risks of traceability, reproducibility, and accuracy.

As a result, AI becomes a silent collaborator—reshaping SOP paragraphs, rewriting risk rationales, and summarizing audit findings in ways that may be elegant, but not necessarily correct.

The Regulators Are Watching, Even Quietly

Many leaders comfort themselves by believing regulators are far from cracking down on AI usage. But this belief is starting to look more like denial than strategy. Across the globe, regulatory bodies have begun to articulate concerns that go far beyond data protection. They are questioning explainability, transparency, reproducibility, and the origins of decisions.

The EU AI Act, FDA draft guidance, the EMA reflection paper on AI, and the NIST AI Risk Management Framework may differ in approach, but they share one core expectation: organizations must be able to show how decisions were made, especially when AI was involved.

In the context of controlled documentation, this is seismic.

Imagine an auditor, three years from now, asking a simple question:

“Who authored this root cause explanation?”

If an employee used AI to restructure the logic, remove details, or introduce new ones, who takes responsibility? And how can the organization prove the reasoning if the AI’s output cannot be regenerated?

The truth is uncomfortable: many organizations would have no answer. They might not even know AI was involved.

Auditors have always rewarded transparency. They will not accept decisions whose origins cannot be demonstrated.

The Dangerous Comfort of “It Looks Good”

One of AI’s greatest strengths is also its most dangerous trait: it produces content that sounds confident. It produces explanations that feel complete. And when a document “looks good,” reviewers often let their guard down.

In traditional compliance processes, poor writing often acts as a signal. A vague CAPA narrative, a rambling deviation summary, or an unclear SOP section invites scrutiny. But AI removes those signals. It replaces human imperfection with polished coherence.

This creates a false sense of security.

A deviation report may now be shorter, clearer, and structurally consistent, yet missing critical context.

An SOP may read more logically, yet reflect a procedural variation that was never formally approved.

A risk explanation may appear grounded, yet reference factors that were never validated.

AI doesn’t simply improve clarity. It reshapes content. And reshaping content without governance is rewriting history.

The Fragility of Reproducibility

A foundational principle of compliance is reproducibility.

If data, logic, or explanation cannot be reproduced, the integrity of the system collapses.

Generative AI, as typically deployed, is non-deterministic. Output is sampled and models are updated over time, so the same prompt today may generate a different answer tomorrow. In regulated operations, that variance is not a feature; it’s a flaw.

Imagine trying to reconstruct why a decision was made during an investigation, only to discover the rationale came from an AI tool that cannot generate the same justification again. The organization is left with a compliance black hole: a decision that cannot be retraced.

Regulators do not accept black holes.

They expect organizations to understand not only what decisions were made, but why. And the “why” must be evidence-based, not probabilistic.
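One way to picture the alternative to a black hole: if the output cannot be regenerated, it has to be captured at the moment it is produced. The sketch below is a minimal illustration under stated assumptions, not a description of any particular product. The record type `AIProvenanceRecord`, its fields, the helpers `capture` and `is_intact`, and the sample identifiers are all hypothetical names chosen for this example.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AIProvenanceRecord:
    """Hypothetical record of one AI-assisted contribution to a controlled document."""
    document_id: str
    author: str
    tool: str
    prompt: str
    output: str
    captured_at: str
    output_sha256: str


def capture(document_id: str, author: str, tool: str, prompt: str, output: str) -> AIProvenanceRecord:
    """Store the exact output with a digest, because the tool may never produce it again."""
    digest = hashlib.sha256(output.encode("utf-8")).hexdigest()
    return AIProvenanceRecord(
        document_id=document_id,
        author=author,
        tool=tool,
        prompt=prompt,
        output=output,
        captured_at=datetime.now(timezone.utc).isoformat(),
        output_sha256=digest,
    )


def is_intact(record: AIProvenanceRecord) -> bool:
    """Check that the stored output still matches the digest taken at capture time."""
    return hashlib.sha256(record.output.encode("utf-8")).hexdigest() == record.output_sha256


# Illustrative values only; none of these identifiers come from a real system.
record = capture(
    document_id="DEV-0001",
    author="j.doe",
    tool="example-llm",
    prompt="Summarize this deviation report.",
    output="Summary text returned by the tool.",
)
print(json.dumps(asdict(record), indent=2))
print(is_intact(record))
```

The point of the design is that the evidence is the stored record, not the model: an investigator retrieves what was actually generated instead of asking the tool to say it again.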

The Coming Shift in Audit Culture

We often view audits as backward-looking events: an examination of what has happened. But AI will force audits to become more forward-facing. The focus will shift from documentation quality to documentation lineage.

The new audit questions will sound different. Instead of:

“Where is this procedure approved?”

Auditors will ask:

“How was this text generated, and by whom?”

Instead of:

“Show me your deviation analysis steps.”

They will ask:

“Can you demonstrate the reasoning path behind this conclusion?”

Instead of:

“Was training completed?”

They will ask:

“How do you ensure that AI did not perform steps reserved for trained personnel?”

This is not science fiction.

This is the predictable evolution of compliance theory: as tools evolve, so must governance.

Organizations that prepare for these questions now will be the ones that thrive later.

A Hard Truth: AI Is Not the Risk – Blindness Is

Despite everything above, AI itself is not inherently dangerous. It is no more risky than spreadsheets, macros, or open-source templates, technologies that once also felt disruptive.

The real risk emerges when leaders cannot see how AI is influencing controlled work.

Shadow usage breaks traceability.

Without traceability, accountability fails.

Without accountability, compliance fails.

In the absence of visibility, compliance becomes a guessing game.

But when AI is brought into the light, when it becomes governed, tracked, and embedded within controlled systems, it stops being a threat and becomes what it was always meant to be: an accelerator.

The organizations that win will not be those that fear AI, nor those that adopt it blindly.

They will be the ones who understand that trustworthy AI requires trustworthy infrastructure.

And that brings us to the role of Interfacing.

How Interfacing Helps Bring Shadow AI Into the Light

Interfacing’s approach to AI governance begins with a simple principle: compliance must evolve as fast as technology does.

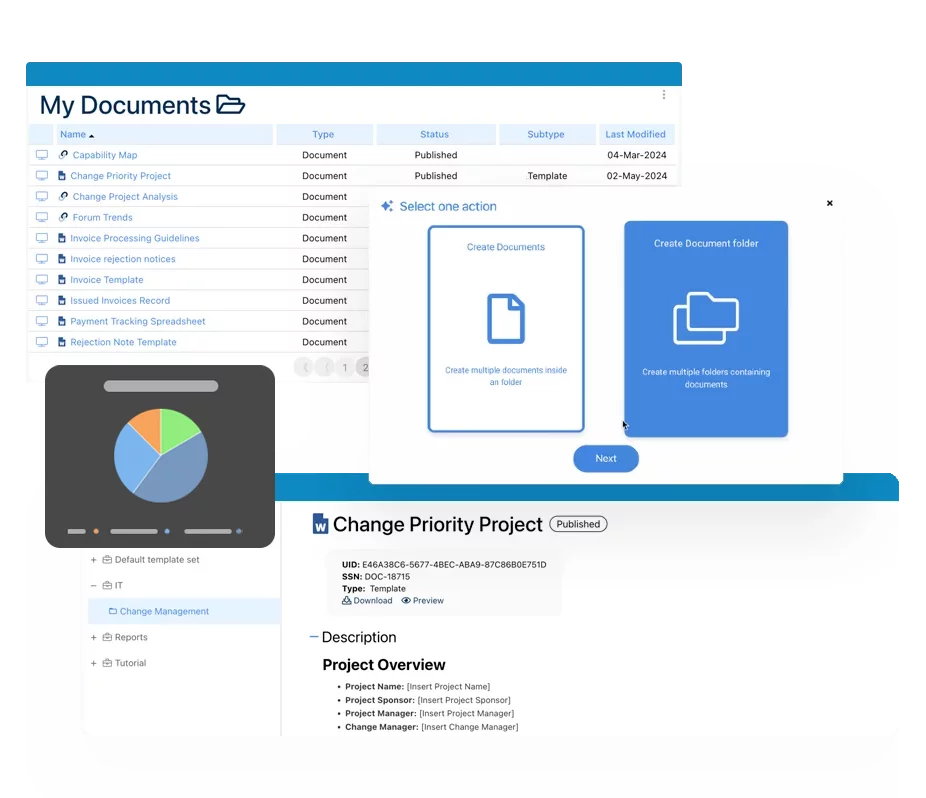

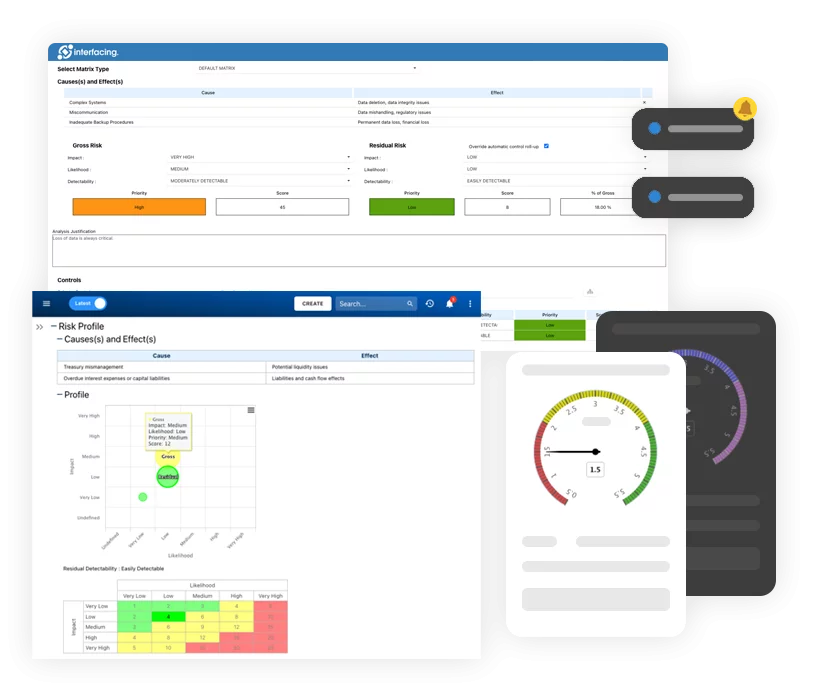

Rather than bolt AI tools onto old processes, Interfacing integrates AI governance directly into the organization’s architecture, documentation, change control, regulatory intelligence, risk frameworks, and the full Integrated Management System.

This changes the game.

When employees generate content, the system can capture lineage.

When documentation evolves, the system can show how and why.

When a regulator asks for traceability, the organization can demonstrate it without hesitation.
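To make "capturing lineage" concrete, here is a minimal sketch of a hash-chained revision history, in which each entry records who edited the text, whether AI assisted, and a digest that links it to the previous entry. It is an illustration of the general idea only and does not represent how Interfacing's platform is implemented. The names `Revision`, `append_revision`, and `verify`, and the sample values, are assumptions made for this example.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Revision:
    """Hypothetical lineage entry for one revision of a controlled text."""
    text: str
    editor: str
    ai_assisted: bool
    prev_hash: Optional[str]
    entry_hash: str


def _digest(text: str, editor: str, ai_assisted: bool, prev_hash: Optional[str]) -> str:
    # Join the fields with a separator that is unlikely to appear in normal text.
    payload = "\x1f".join([text, editor, str(ai_assisted), prev_hash or ""])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_revision(history: List[Revision], text: str, editor: str, ai_assisted: bool) -> List[Revision]:
    """Return a new history with one more entry chained to the previous one."""
    prev_hash = history[-1].entry_hash if history else None
    entry = Revision(text, editor, ai_assisted, prev_hash,
                     _digest(text, editor, ai_assisted, prev_hash))
    return history + [entry]


def verify(history: List[Revision]) -> bool:
    """Confirm that every entry matches its digest and links to its predecessor."""
    prev = None
    for rev in history:
        expected = _digest(rev.text, rev.editor, rev.ai_assisted, rev.prev_hash)
        if rev.prev_hash != prev or rev.entry_hash != expected:
            return False
        prev = rev.entry_hash
    return True


# Illustrative values only.
history = append_revision([], "Original root cause narrative.", "j.doe", False)
history = append_revision(history, "Reworded root cause narrative.", "j.doe", True)
print(verify(history))
print([rev.ai_assisted for rev in history])
```

Because each digest covers the previous one, a silent change to any earlier revision causes `verify` to fail, which is the property an auditor asking about lineage would care about.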

Interfacing’s Digital Twin Organization (DTO) does more than map processes. It reflects how AI interacts with them, where decisions originate, and how changes ripple across roles, controls, risks, and procedures. This gives leaders something they’ve been missing: visibility.

Interfacing’s AI-driven impact analysis reveals deviations that don’t belong, logic shifts that occurred silently, and edits that don’t match previous patterns, all of which can signal that AI may have influenced the content.

Instead of retroactively guessing what happened, organizations get proactive intelligence about what is changing and why.

And because Interfacing’s platform is built for regulated industries, with validated environments, full audit trails, encryption, segregation of duties, controlled workflows, and secure lifecycle management, the use of AI becomes part of the compliance fabric rather than an ungoverned leak within it.

The result is simple but powerful:

AI becomes accountable.

Documentation remains trustworthy.

Compliance gets stronger, not weaker.

Through structured workflows, lineage tracking, and a unified system of record, organizations using Interfacing can let employees embrace AI—without fearing the shadows.

The Future Belongs to Organizations Who Prepare Now

The rise of AI Shadow Compliance is not a crisis; it is a turning point. Every major shift in technology, from electronic signatures to cloud systems to automated workflows, created fear before it created structure. The organizations that thrived were those that built governance early, before regulators forced the issue.

AI is no different.

Right now, organizations have a choice:

Let AI reshape their compliance landscape invisibly, or bring AI under governance and transform it into a strength.

The winners will be those who choose visibility.

AI will not replace compliance.

But compliance that embraces AI, openly, transparently, and intelligently, will replace the compliance models that refuse to change.

Interfacing helps organizations make that transition with confidence, clarity, and control.

And in a world where AI may write the first draft of tomorrow’s documentation, the organizations that stay transparent will be the ones regulators trust most.

Why Choose Interfacing?

With over two decades of AI, Quality, Process, and Compliance software expertise, Interfacing continues to be a leader in the industry. To date, it has served more than 500 world-class enterprises and management consulting firms across all industries and sectors. Interfacing continues to provide digital, cloud, and AI solutions that enable organizations to enhance, control, and streamline their processes while easing the burden of regulatory compliance and quality management programs.

To explore further or discuss how Interfacing can assist your organization, please complete the form below.

Documentation: Driving Transformation, Governance and Control

• Gain real-time, comprehensive insights into your operations.

• Improve governance, efficiency, and compliance.

• Ensure seamless alignment with regulatory standards.

eQMS: Automating Quality & Compliance Workflows & Reporting

• Simplify quality management with automated workflows and monitoring.

• Streamline CAPA, supplier audits, training and related workflows.

• Turn documentation into actionable insights for Quality 4.0.

Low-Code Rapid Application Development: Accelerating Digital Transformation

• Build custom, scalable applications swiftly.

• Reduce development time and cost.

• Adapt faster and stay agile in the face of evolving customer and business needs.

AI to Transform your Business!

The AI-powered tools are designed to streamline operations, enhance compliance, and drive sustainable growth. Check out how AI can:

• Respond to employee inquiries

• Transform videos into processes

• Assess regulatory impact & process improvements

• Generate forms, processes, risks, regulations, KPIs & more

• Parse regulatory standards into requirements

Document, analyze, improve, digitize and monitor your business processes, risks, regulatory requirements and performance indicators within Interfacing’s Digital Twin integrated management system, the Enterprise Process Center®!

Trusted by Customers Worldwide!

More than 400 world-class enterprises and management consulting firms.